Cran R Reading Chunks From a Single Text File in Parallel?

Efficient input/output

This chapter explains how to efficiently read and write data in R. Input/output (I/O) is the technical term for reading and writing data: the process of getting information into a particular computer system (in this case R) and then exporting it to the 'outside world' again (in this case as a file format that other software can read). Data I/O will be needed on projects where data comes from, or goes to, external sources. However, the majority of R resources and documentation start with the optimistic assumption that your data has already been loaded, ignoring the fact that importing datasets into R, and exporting them to the world outside the R ecosystem, can be a time-consuming and frustrating process. Tricky, slow or ultimately unsuccessful data I/O can cripple efficiency right at the outset of a project. Conversely, reading and writing your data efficiently will make your R projects more likely to succeed in the outside world.

The first section introduces rio, a 'meta package' for efficiently reading and writing data in a range of file formats. rio requires only two intuitive functions for data I/O, making it efficient to learn and use. Next we explore in more detail efficient functions for reading in files stored in common plain text file formats from the readr and data.table packages. Binary formats, which can dramatically reduce file sizes and read/write times, are covered next.

With the accelerating digital revolution and growth in open data, an increasing proportion of the world's data can be downloaded from the internet. This trend is set to continue, making section 5.5, on downloading and importing data from the web, important for 'future-proofing' your I/O skills. The benchmarks in this chapter demonstrate that choice of file format and packages for data I/O can have a huge impact on computational efficiency.

Before reading in a single line of data, it is worth considering a general principle for reproducible data management: never modify raw data files. Raw data should be seen as read-only, and contain information about its provenance. Keeping the original file name and commenting on its origin are a couple of ways to improve reproducibility, even when the data are not publicly available.

Prerequisites

R can read data from a variety of sources. We begin by discussing the generic package rio that handles a wide variety of data types. Special attention is paid to CSV files, which leads to the readr and data.table packages. The relatively new package feather is introduced as a binary file format that has cross-language support.

    library("rio")
    library("readr")
    library("data.table")
    library("feather")

We also use the WDI package to illustrate accessing online data sets.

Top 5 tips for efficient data I/O

- If possible, keep the names of local files downloaded from the internet or copied onto your computer unchanged. This will help you trace the provenance of the data in the future.

- R's native file format is .Rds. These files can be imported and exported using readRDS() and saveRDS() for fast and space efficient data storage.

- Use import() from the rio package to efficiently import data from a wide range of formats, avoiding the hassle of loading format-specific libraries.

- Use the readr or data.table equivalents of read.table() to efficiently import large text files.

- Use file.size() and object.size() to keep track of the size of files and R objects and take action if they get too big.
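The last tip can be tried out with base R alone. The sketch below is illustrative only: it writes the built-in mtcars dataset to a temporary file (the file and variable names are assumptions, not part of the chapter's data) and compares the size on disk with the size of the object in memory.

```r
# Write a built-in dataset to a temporary CSV file
tmp_csv <- tempfile(fileext = ".csv")
write.csv(mtcars, tmp_csv)

# Size of the file on disk, in bytes
size_on_disk <- file.size(tmp_csv)

# Size of the same data once loaded as an R object
cars_df <- read.csv(tmp_csv)
size_in_memory <- object.size(cars_df)

size_on_disk
format(size_in_memory, units = "Kb")
```

The two numbers generally differ, because the in-memory representation carries attributes and uses fixed-width numeric types, whereas the text file stores digits as characters.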

Versatile data import with rio

rio is 'A Swiss-Army Knife for Data I/O'. rio provides easy-to-use and computationally efficient functions for importing and exporting tabular data in a range of file formats. As stated in the package's vignette, rio aims to "simplify the process of importing data into R and exporting data from R." The vignette goes on to explain how many of the functions for data I/O described in R's Data Import/Export manual are out of date (for example referring to WriteXLS but not the more recent readxl package) and difficult to learn.

This is why rio is covered at the start of this chapter: if you just want to get data into R, with a minimum of time learning new functions, there is a fair chance that rio can help, for many common file formats. At the time of writing, these include .csv, .feather, .json, .dta, .xls, .xlsx and Google Sheets (see the package's github page for up-to-date information). Below we illustrate the key rio functions of import() and export():

    library("rio")
    # Specify a file
    fname = system.file("extdata/voc_voyages.tsv", package = "efficient")
    # Import the file (uses the fread function from data.table)
    voyages = import(fname)
    # Export the file as an Excel spreadsheet
    export(voyages, "voc_voyages.xlsx")

There was no need to specify the optional format argument for data import and export functions because this is inferred by the suffix, in the above example .tsv and .xlsx respectively. You can override the inferred file format for both functions with the format argument. You could, for example, create a comma-delimited file called voc_voyages.xlsx with export(voyages, "voc_voyages.xlsx", format = "csv"). However, this would not be a good idea: it is important to ensure that a file's suffix matches its format.
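To make the idea of suffix-based format inference concrete, here is a minimal base R sketch. It is not rio's actual implementation: import_by_suffix() is a hypothetical helper written for illustration, and it only knows about three formats.

```r
# Hypothetical helper (not part of rio): choose a base R reader from the suffix.
# The format argument defaults to the file extension but can be overridden.
import_by_suffix <- function(path, format = tools::file_ext(path)) {
  switch(tolower(format),
    csv = read.csv(path),
    tsv = read.delim(path),
    rds = readRDS(path),
    stop("Unsupported format: ", format)
  )
}

# Write a small tab-separated file and read it back
tmp <- tempfile(fileext = ".tsv")
write.table(head(iris), tmp, sep = "\t", row.names = FALSE)

iris_head <- import_by_suffix(tmp)                   # format inferred from ".tsv"
iris_head2 <- import_by_suffix(tmp, format = "tsv")  # format given explicitly
```

The design point is the same as in the text above: inference keeps calls short, while an explicit format argument remains available for files whose suffix is misleading.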

To provide another example, the code chunk below downloads and imports as a data frame information about the countries of the world stored in .json (downloading data from the internet is covered in more detail in Section 5.5):

    capitals = import("https://github.com/mledoze/countries/raw/master/countries.json")

The ability to import and use .json data is becoming increasingly common as it is a standard output format for many APIs. The jsonlite and geojsonio packages have been developed to make this as easy as possible.

Exercises

- The final line in the code chunk above shows a neat feature of rio and some other packages: the output format is determined by the suffix of the file-name, which makes for concise code. Try opening the voc_voyages.xlsx file with an editor such as LibreOffice Calc or Microsoft Excel to ensure that the export worked, before removing this rather inefficient file format from your system: file.remove("voc_voyages.xlsx")

- Try saving the voyages data frames into 3 other file formats of your choosing (see vignette("rio") for supported formats). Try opening these in external programs. Which file formats are more portable?

- As a bonus exercise, create a simple benchmark to compare the write times for the different file formats used to complete the previous exercise. Which is fastest? Which is the most space efficient?

Plain text formats

'Plain text' data files are encoded in a format (typically UTF-8) that can be read by humans and computers alike. The great thing about plain text files is their simplicity and their ease of use: any programming language can read a plain text file. The most common plain text format is .csv, comma-separated values, in which columns are separated by commas and rows are separated by line breaks. This is illustrated in the simple example below:

    Person, Nationality, Country of Birth
    Robin, British, England
    Colin, British, Scotland

There is often more than one way to read data into R and .csv files are no exception. The method you choose has implications for computational efficiency. This section investigates methods for getting plain text files into R, with a focus on three approaches: base R's plain text reading functions such as read.csv(); the data.table approach, which uses the function fread(); and the newer readr package which provides read_csv() and other read_*() functions such as read_tsv(). Although these functions perform differently, they are largely cross-compatible, as illustrated in the below chunk, which loads data on the concentration of CO2 in the atmosphere over time:

In general, you should never "hand-write" a CSV file. Instead, you should use write.csv() or an equivalent function. The Internet Engineering Task Force has the CSV definition that facilitates sharing CSV files between tools and operating systems.

    df_co2 = read.csv("extdata/co2.csv")
    df_co2_readr = readr::read_csv("extdata/co2.csv")
    #> Warning: Missing column names filled in: 'X1' [1]
    #>
    #> ── Column specification ────────────────────────────────────────────────────────
    #> cols(
    #>   X1 = col_double(),
    #>   time = col_double(),
    #>   co2 = col_double()
    #> )
    df_co2_dt = data.table::fread("extdata/co2.csv")

Note that a function 'derived from' another in this context means that it calls another function. Functions such as read.csv() and read.delim() are in fact wrappers around read.table(). This can be seen in the source code of read.csv(), for example, which shows that the function is roughly the equivalent of read.table(file, header = TRUE, sep = ",").
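The wrapper relationship can be checked directly. The sketch below uses a small temporary file with made-up values (an assumption for illustration, not the chapter's co2.csv) and calls read.table() with the defaults that read.csv() sets.

```r
# Create a small CSV file to read back (illustrative values only)
tmp <- tempfile(fileext = ".csv")
write.csv(data.frame(time = c(1959, 1960), co2 = c(315.42, 316.31)),
          tmp, row.names = FALSE)

via_csv <- read.csv(tmp)

# read.table() with the argument defaults that read.csv() supplies
via_table <- read.table(tmp, header = TRUE, sep = ",", quote = "\"",
                        dec = ".", fill = TRUE, comment.char = "")

identical(via_csv, via_table)
```

Besides header and sep, read.csv() also changes the defaults for quote, fill and comment.char, which is why the text describes the equivalence as rough.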

Although this section is focussed on reading text files, it demonstrates the wider principle that the speed and flexibility advantages of additional read functions can be offset by the disadvantage of additional package dependencies (in terms of complexity and maintaining the code) for small datasets. The real benefits kick in on large datasets. Of course, there are some data types that require a certain package to load in R: the readstata13 package, for example, was developed solely to read in .dta files generated by versions of Stata 13 and above.

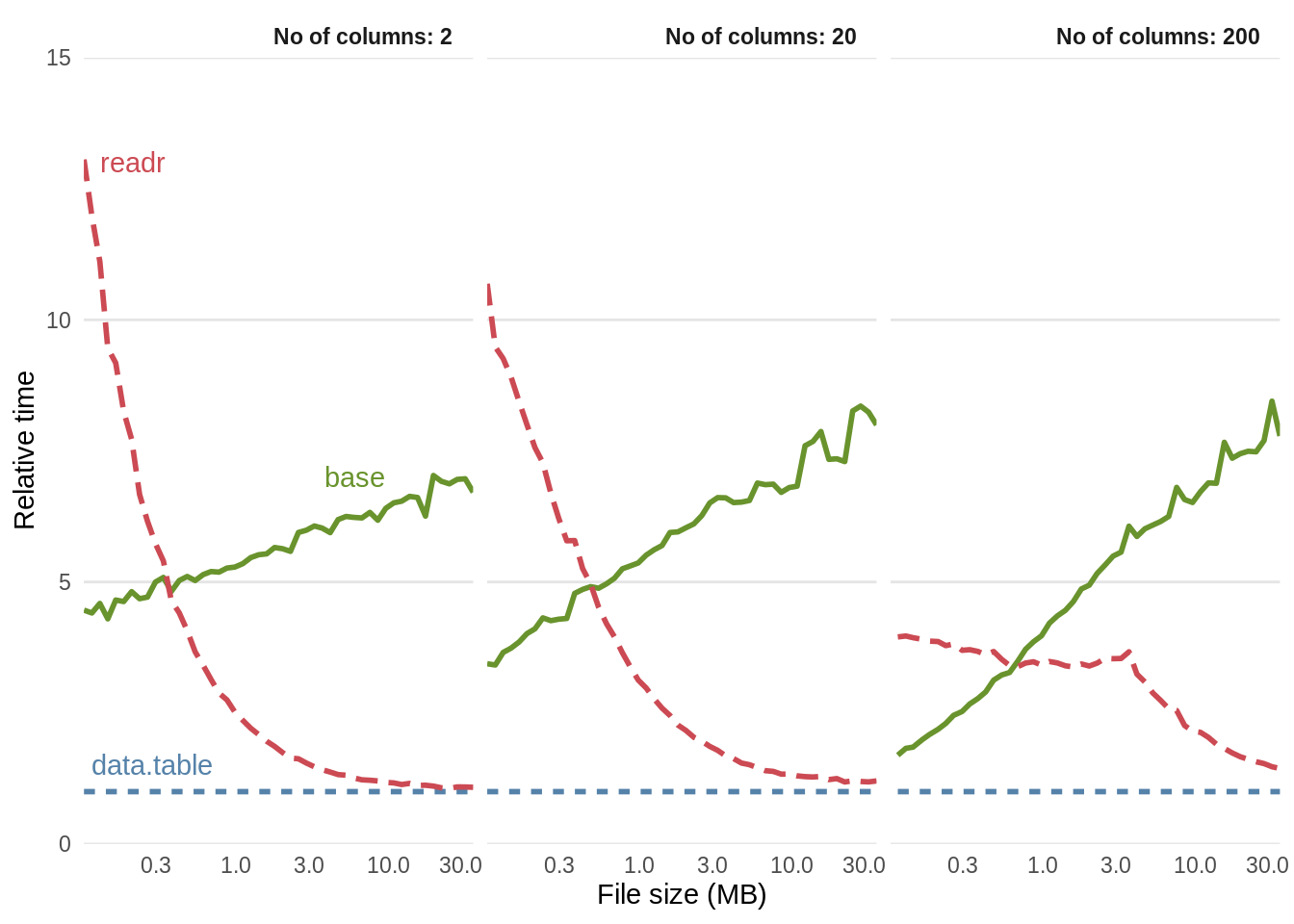

Figure 5.1 demonstrates that the relative performance gains of the data.table and readr approaches increase with data size, especially for data with many rows. Below around \(1\) MB read.csv() is actually faster than read_csv() while fread() is much faster than both, although these savings are likely to be inconsequential for such small datasets.

For files beyond \(100\) MB in size fread() and read_csv() can be expected to be around 5 times faster than read.csv(). This efficiency gain may be inconsequential for a one-off file of \(100\) MB running on a fast computer (which still takes less than a minute with read.csv()), but could represent an important speed-up if you frequently load large text files.

Figure 5.1: Benchmarks of base, data.table and readr approaches for reading csv files, using the functions read.csv(), fread() and read_csv(), respectively. The facets ranging from \(2\) to \(200\) represent the number of columns in the csv file.

When tested on a large (\(4\)GB) .csv file it was found that fread() and read_csv() were almost identical in load times and that read.csv() took around \(5\) times longer. This consumed more than \(10\)GB of RAM, making it unsuitable to run on many computers (see Section 8.3 for more on memory). Note that both readr and base methods can be made significantly faster by pre-specifying the column types at the outset (see below). Further details are provided by the help in ?read.table.

    read.csv(file_name, colClasses = c("numeric", "numeric"))

In some cases with R programming there is a trade-off between speed and robustness. This is illustrated below with reference to differences in how the readr, data.table and base R approaches handle unexpected values. Figure 5.1 highlights the benefit of switching to fread() and (eventually) to read_csv() as the dataset size increases. For a small (\(1\)MB) dataset: fread() is around \(5\) times faster than base R.
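The colClasses call shown earlier in this section can be tried on a self-contained example. This is a sketch with synthetic data (the column names time and co2 are borrowed from the chapter, the values are random), showing that pre-specifying types also changes what you get back, not just how fast you get it.

```r
# Synthetic two-column file; values are random, not real measurements
tmp <- tempfile(fileext = ".csv")
n <- 1e4
write.csv(data.frame(time = seq_len(n), co2 = rnorm(n, mean = 350)),
          tmp, row.names = FALSE)

# Let read.csv() work out the column types itself
guessed <- read.csv(tmp)

# Tell read.csv() the column types up front, skipping type detection
specified <- read.csv(tmp, colClasses = c("numeric", "numeric"))

sapply(guessed, class)
sapply(specified, class)
```

When types are guessed, the whole-number time column comes back as integer; with colClasses it is numeric, as requested. In readr the equivalent argument is col_types.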

Differences between fread() and read_csv()

The file voc_voyages was taken from a dataset on Dutch naval expeditions used with permission from the CWI Database Architectures Group. The data is described more fully at monetdb.org. From this dataset we primarily use the 'voyages' table which lists Dutch shipping expeditions by their date of departure.

    fname = system.file("extdata/voc_voyages.tsv", package = "efficient")
    voyages_base = read.delim(fname)

When we run the equivalent operation using readr,

    voyages_readr = readr::read_tsv(fname)
    #>
    #> ── Column specification ────────────────────────────────────────────────────────
    #> cols(
    #>   .default = col_character(),
    #>   number = col_double(),
    #>   number_sup = col_logical(),
    #>   trip = col_double(),
    #>   tonnage = col_double(),
    #>   hired = col_logical(),
    #>   departure_date = col_date(format = ""),
    #>   cape_arrival = col_date(format = ""),
    #>   cape_departure = col_date(format = ""),
    #>   cape_call = col_logical(),
    #>   arrival_date = col_date(format = ""),
    #>   next_voyage = col_double()
    #> )
    #> ℹ Use `spec()` for the full column specifications.
    #> Warning: 77 parsing failures.
    #>  row   col           expected actual file
    #> 1023 hired 1/0/T/F/TRUE/FALSE 1664   '/home/travis/R/Library/efficient/extdata/voc_voyages.tsv'
    #> 1025 hired 1/0/T/F/TRUE/FALSE 1664   '/home/travis/R/Library/efficient/extdata/voc_voyages.tsv'
    #> 1030 hired 1/0/T/F/TRUE/FALSE 1664   '/home/travis/R/Library/efficient/extdata/voc_voyages.tsv'
    #> 1034 hired 1/0/T/F/TRUE/FALSE 1664/5 '/home/travis/R/Library/efficient/extdata/voc_voyages.tsv'
    #> 1035 hired 1/0/T/F/TRUE/FALSE 1665   '/home/travis/R/Library/efficient/extdata/voc_voyages.tsv'
    #> .... ..... .................. ...... ..........................................................
    #> See problems(...) for more details.

a warning is raised regarding row 1023 in the hired variable. This is because read_*() decides what class each variable is based on the first \(1000\) rows, rather than all rows, as base read.*() functions do. Printing the offending element

    voyages_base$hired[1023] # a character
    #> [1] "1664"
    voyages_readr$hired[1023] # an NA: text cannot be converted to logical (i.e. read_*() interprets this column as logical)
    #> [1] NA

Reading the file using data.table

    # Verbose warnings not shown
    voyages_dt = data.table::fread(fname)

generates 5 warning messages stating that columns 2, 4, 9, 10 and 11 were Bumped to type character on data row ..., with the offending rows printed in place of .... Instead of changing the offending values to NA, as readr does for the built column (9), fread() automatically converts any columns it thought of as numeric into characters. An additional feature of fread() is that it can read in a selection of the columns, either by their index or name, using the select argument. This is illustrated below by reading in only half of the columns (the first 11) from the voyages dataset and comparing the result with fread()'ing all the columns in.

    library("microbenchmark")
    microbenchmark(times = 5,
      with_select = data.table::fread(fname, select = 1:11),
      without_select = data.table::fread(fname)
    )
    #> Unit: milliseconds
    #>            expr  min   lq mean median   uq  max neval
    #>     with_select 11.3 11.4 11.5   11.4 11.4 12.0     5
    #>  without_select 15.3 15.4 15.4   15.4 15.5 15.6     5

To summarise, the differences between base, readr and data.table functions for reading in data go beyond code execution times. The functions read_csv() and fread() boost speed partially at the expense of robustness because they determine column classes based on a small sample of available data. The similarities and differences between the approaches are summarised for the Dutch shipping data in Table 5.1.

| number | boatname | built | departure_date | Function |
|---|---|---|---|---|
| integer | character | character | character | base |
| numeric | character | character | Date | readr |
| integer | character | character | IDate, Date | data.table |

Table 5.1 shows 4 main similarities and differences between the three types of read function:

- For uniform data such as the 'number' variable in Table 5.1, all reading methods yield a numeric result (integer for base R and data.table, numeric for readr).

- For columns that are obviously characters such as 'boatname', the base method results in factors in versions of R before 4.0.0 (unless stringsAsFactors is set to FALSE) whereas fread() and read_csv() functions return characters.

- For columns in which the first 1000 rows are of one type but which contain anomalies, such as 'built' and 'departure_date' in the shipping example, fread() coerces the result to characters. read_csv() and siblings, by contrast, keep the class that is correct for the first 1000 rows and set the anomalous records to NA. This is illustrated in Table 5.1, where read_tsv() produces a numeric class for the 'built' variable, ignoring the non-numeric text in row 2841.

- read_*() functions generate objects of class tbl_df, an extension of the data.frame class, as discussed in Section 6.4. fread() generates objects of class data.table. These can be used as standard data frames but differ subtly in their behaviour.

An additional difference is that read_csv() creates data frames of class tbl_df, and data.frame. This makes no practical difference, unless the tibble package is loaded, as described in section 6.2 in the next chapter.

The wider point associated with these tests is that functions that save time can also lead to additional considerations or complexities for your workflow. Taking a look at what is going on 'under the hood' of fast functions to increase speed, as we have done in this section, can help you understand the knock-on consequences of choosing fast functions over slower functions from base R.
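The effect of guessing column classes from a sample can be emulated in base R without any of the packages above. The sketch below is an illustration of the principle, not readr's or data.table's actual code: it uses type.convert() on a made-up 'hired' column that looks logical for 1000 values and then contains a year.

```r
# A column that looks logical for the first 1000 values, then contains a year
hired <- c(rep(c("TRUE", "FALSE"), 500), "1664")

# Guess the class from the first 1000 values only (sample-based guessing)
class_from_sample <- class(type.convert(hired[1:1000], as.is = TRUE))

# Guess the class from every value (what base R readers effectively do)
class_from_all <- class(type.convert(hired, as.is = TRUE))

# Forcing the sample-based guess onto the anomalous value loses it
forced <- as.logical(hired[1001])

class_from_sample
class_from_all
forced
```

The sample suggests a logical column, the full data forces a character column, and coercing the anomaly to the sampled class yields NA, which mirrors the behaviour described for the hired variable.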

Preprocessing text outside R

There are circumstances when datasets become too large to read directly into R. Reading in a \(4\) GB text file using the functions tested above, for example, consumes all available RAM on a \(16\) GB machine. To overcome this limitation, external stream processing tools can be used to preprocess large text files. The following command, using the Linux command line 'shell' (or Windows based Linux shell emulator Cygwin) command split, for example, will break a large multi GB file into many chunks, each of which is more manageable for R:

    split -b100m bigfile.csv

The result is a series of files, set to 100 MB each with the -b100m argument in the above code. By default these will be called xaa, xab and can be read in one chunk at a time (e.g. using read.csv(), fread() or read_csv(), described in the previous section) without crashing most modern computers.

Splitting a large file into individual chunks may allow it to be read into R. This is not an efficient way to import large datasets, however, because it results in a non-random sample of the data. A more efficient, robust and scalable way to work with large datasets is via databases, covered in Section 6.6 in the next chapter.
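Chunk-at-a-time processing can also be sketched inside R, using a file connection rather than separate chunk files. This is a minimal illustration under stated assumptions: the file is a small temporary CSV created here, the chunk size of 4 rows is arbitrary, and the running total stands in for whatever per-chunk processing is needed.

```r
# Create a small CSV file standing in for a large one
tmp <- tempfile(fileext = ".csv")
write.csv(data.frame(id = 1:10, value = (1:10)^2), tmp, row.names = FALSE)

con <- file(tmp, open = "r")
header <- readLines(con, n = 1)  # keep the header to re-attach to each chunk
chunk_size <- 4                  # rows per chunk (arbitrary for this sketch)
total <- 0
n_chunks <- 0

repeat {
  lines <- readLines(con, n = chunk_size)
  if (length(lines) == 0) break  # end of file reached
  chunk <- read.csv(text = c(header, lines))
  total <- total + sum(chunk$value)  # process the chunk, then discard it
  n_chunks <- n_chunks + 1
}
close(con)

total
n_chunks
```

Reading by lines keeps each row intact, whereas split with a byte-size argument cuts on byte boundaries and can break a row across two chunk files.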

Binary file formats

There are limitations to plain text files. Even the trusty CSV format is "restricted to tabular data, lacks type-safety, and has limited precision for numeric values" (Eddelbuettel, Stokely, and Ooms 2016). Once you have read in the raw data (e.g. from a plain text file) and tidied it (covered in the next chapter), it is common to want to save it for future use. Saving it after tidying is recommended, to reduce the chance of having to run all the data cleaning code again. We recommend saving tidied versions of large datasets in one of the binary formats covered in this section: this will decrease read/write times and file sizes, making your data more portable.

Unlike plain text files, data stored in binary formats cannot be read by humans. This allows space-efficient data compression but means that the files will be less language agnostic. R's native file format, .Rds, for example may be difficult to read and write using external programs such as Python or LibreOffice Calc. This section provides an overview of binary file formats in R, with benchmarks to show how they compare with the plain text format .csv covered in the previous section.

Native binary formats: Rdata or Rds?

.Rds and .RData are R's native binary file formats. These formats are optimised for speed and compression ratios. But what is the difference between them? The following code chunk demonstrates the key difference:

    save(df_co2, file = "extdata/co2.RData")
    saveRDS(df_co2, "extdata/co2.Rds")
    load("extdata/co2.RData")
    df_co2_rds = readRDS("extdata/co2.Rds")
    identical(df_co2, df_co2_rds)
    #> [1] TRUE

The first method is the most widely used. It uses the save() function which takes any number of R objects and writes them to a file, which must be specified by the file = argument. save() is like save.image(), which saves all the objects currently loaded in R.

The second method is slightly less used but we recommend it. Apart from being slightly more concise for saving single R objects, the readRDS() function is more flexible: as shown in the subsequent line, the resulting object can be assigned to any name. In this case we called it df_co2_rds (which we show to be identical to df_co2, loaded with the load() command) but we could have called it anything or simply printed it to the console.

Using saveRDS() is good practice because it forces you to specify object names. If you use save() without care, you could forget the names of the objects you saved and accidentally overwrite objects that already existed.
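The overwriting risk is easy to reproduce. The following sketch uses temporary files and a throwaway object x (both are assumptions for illustration) to contrast load(), which restores objects under their saved names, with readRDS(), which returns a value to be assigned wherever you like.

```r
tmp_rdata <- tempfile(fileext = ".RData")
tmp_rds <- tempfile(fileext = ".Rds")

x <- 1:3
save(x, file = tmp_rdata)  # stores the object together with its name
saveRDS(x, tmp_rds)        # stores only the value

# Later in the session, x is reused for something else
x <- "something else"

# readRDS() leaves x alone: the value goes wherever we assign it
y <- readRDS(tmp_rds)
x_after_readRDS <- x

# load() silently replaces the current x with the saved one
loaded_names <- load(tmp_rdata)
x_after_load <- x
```

load() does return the names of the objects it restored (here captured in loaded_names), but only invisibly, so the overwrite is easy to miss.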

The feather file format

Feather was developed as a collaboration between R and Python developers to create a fast, light and language agnostic format for storing data frames. The code chunk below shows how it can be used to save and then re-load the df_co2 dataset loaded previously in both R and Python:

    # In R
    library("feather")
    write_feather(df_co2, "extdata/co2.feather")
    df_co2_feather = read_feather("extdata/co2.feather")

    # In Python
    import feather
    path = 'data/co2.feather'
    df_co2_feather = feather.read_dataframe(path)

Benchmarking binary file formats

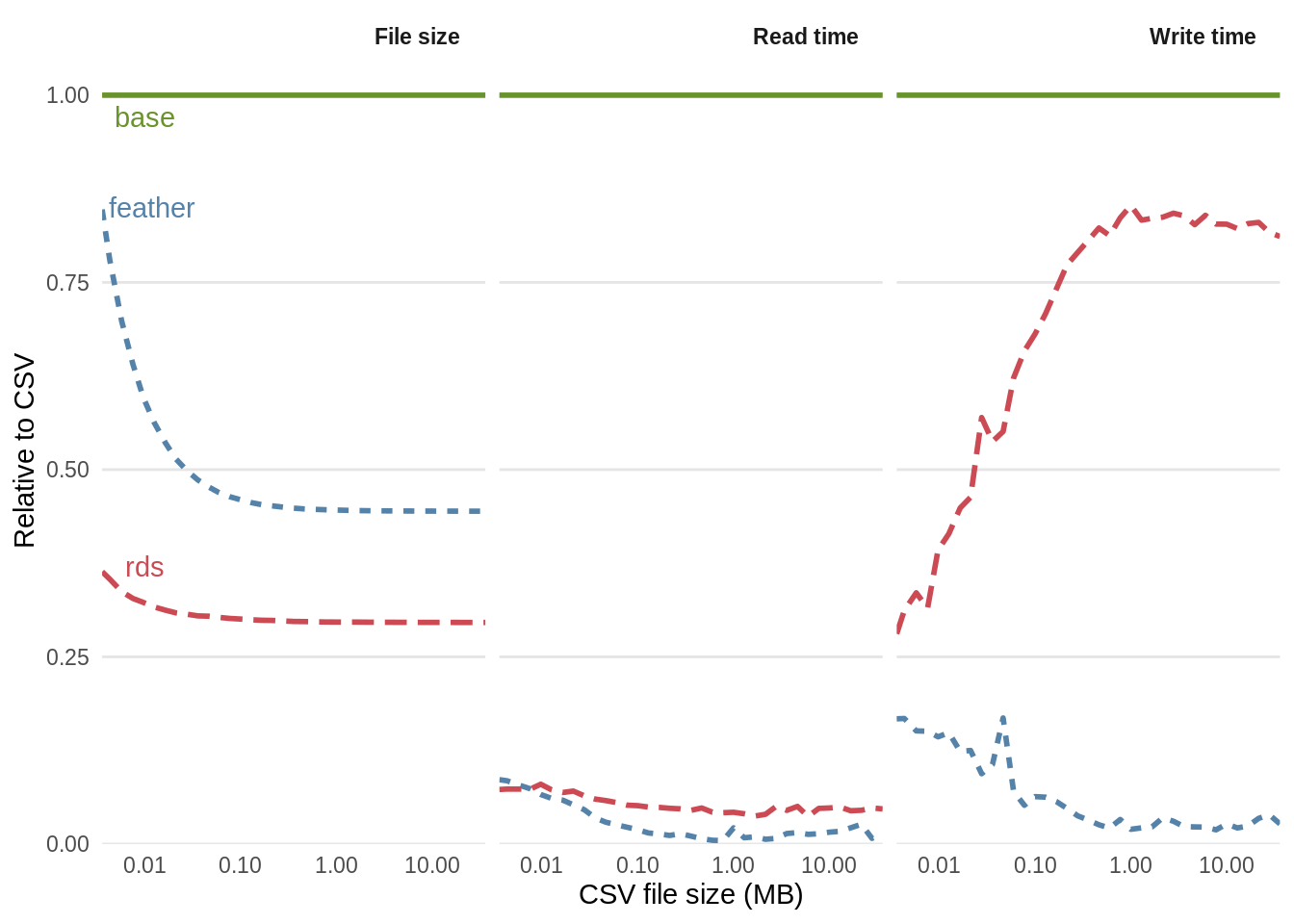

We know that binary formats are advantageous from space and read/write time perspectives, but how much so? The benchmarks in this section, based on large matrices containing random numbers, are designed to help answer this question. Figure 5.2 shows the relative efficiency gains of the feather and Rds formats, compared with base CSV. From left to right, figure 5.2 shows benefits in terms of file size, read times, and write times.

In terms of file size, Rds files perform the best, occupying just over a quarter of the hard disc space compared with the equivalent CSV files. The equivalent feather format also outperformed the CSV format, occupying around half the disc space.


Figure 5.2: Comparison of the performance of binary formats for reading and writing datasets with 20 columns with the plain text format CSV. The functions used to read the files were read.csv(), readRDS() and feather::read_feather() respectively. The functions used to write the files were write.csv(), saveRDS() and feather::write_feather().

The results of this simple disk usage benchmark show that saving data in a compressed binary format can save space and, if your data will be shared on-line, reduce data download time and bandwidth usage. But how does each method compare from a computational efficiency perspective? The read and write times for each file format are illustrated in the middle and right hand panels of figure 5.2.

The results show that file size is not a reliable predictor of data read and write times. This is due to the computational overheads of compression. Although feather files occupied more disc space, they were roughly equivalent in terms of read times: the functions read_feather() and readRDS() were consistently around 10 times faster than read.csv(). In terms of write times, feather excels: write_feather() was around 10 times faster than write.csv(), whereas saveRDS() was only around 1.2 times faster.

Note that the performance of different file formats depends on the content of the data being saved. The benchmarks above showed savings for matrices of random numbers. For real life data, the results would be quite different. The voyages dataset, saved as an Rds file, occupied less than a quarter of the disc space of the original TSV file, whereas the file size was larger than the original when saved as a feather file!
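The dependence of file size on content can be seen with a small base R experiment. This is a sketch with synthetic data, not a reproduction of the benchmarks above: it compares CSV and Rds file sizes for a data frame of random numbers and for one of highly repetitive values.

```r
set.seed(1)
n <- 1e4
random_df <- data.frame(x = rnorm(n), y = rnorm(n))          # hard to compress
repetitive_df <- data.frame(x = rep(1, n), y = rep(2, n))    # easy to compress

# For each data frame, write both formats and record file sizes in bytes
sizes <- sapply(list(random = random_df, repetitive = repetitive_df), function(d) {
  f_csv <- tempfile(fileext = ".csv")
  f_rds <- tempfile(fileext = ".Rds")
  write.csv(d, f_csv, row.names = FALSE)
  saveRDS(d, f_rds)
  c(csv = file.size(f_csv), rds = file.size(f_rds))
})

sizes
```

Because saveRDS() compresses by default, the repetitive data shrinks far more than the random data, so any size ratio measured on one kind of content should not be assumed to carry over to another.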

Protocol Buffers

Google's Protocol Buffers offers a portable, efficient and scalable solution to binary data storage. A recent package, RProtoBuf, provides an R interface. This approach is not covered in this book, as it is new, advanced and not (at the time of writing) widely used in the R community. The approach is discussed in detail in a paper on the subject, which also provides an excellent overview of the issues associated with different file formats (Eddelbuettel, Stokely, and Ooms 2016).

Getting data from the internet

The code chunk below shows how the functions download.file() and unzip() can be used to download and unzip a dataset from the internet. (Since R 3.2.3 the base function download.file() can be used to download from secure (https://) connections on any operating system.) R can automate processes that are often performed manually, e.g. through the graphical user interface of a web browser, with potential advantages for reproducibility and programmer efficiency. The result is data stored neatly in the data directory ready to be imported. Note we deliberately kept the file name intact, enhancing understanding of the data's provenance so future users can quickly find out where the data came from. Note also that part of the dataset is stored in the efficient package. Using R for basic file management can help create a reproducible workflow, as illustrated below.

    url = "https://www.monetdb.org/sites/default/files/voc_tsvs.zip"
    download.file(url, "voc_tsvs.zip") # download file
    unzip("voc_tsvs.zip", exdir = "data") # unzip files
    file.remove("voc_tsvs.zip") # tidy up by removing the zip file

This workflow applies equally to downloading and loading single files. Note that one could make the code more concise by replacing the second line with df = read.csv(url). However, we recommend downloading the file to disk so that if for some reason it fails (e.g. if you would like to skip the first few lines), you don't have to keep downloading the file over and over again. The code below downloads and loads data on atmospheric concentrations of CO2. Note that this dataset is also available from the datasets package.
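One way to avoid repeated downloads is to check for a local copy first. The helper below is a hypothetical sketch (download_once() is not a base R or package function) that wraps download.file() so the network is only touched when the destination file does not yet exist.

```r
# Hypothetical helper: download a file only if there is no local copy yet
download_once <- function(url, destfile) {
  if (!file.exists(destfile)) {
    # mode = "wb" avoids corrupting binary files such as .zip on Windows
    download.file(url, destfile, mode = "wb")
  }
  invisible(destfile)
}

# Usage (not run here because it needs network access):
# download_once("https://www.monetdb.org/sites/default/files/voc_tsvs.zip",
#               "voc_tsvs.zip")
```

Calling the helper a second time with the same destination returns immediately, so a script can be re-run while you experiment with import options without fetching the data again.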

    url = "https://vincentarelbundock.github.io/Rdatasets/csv/datasets/co2.csv"
    download.file(url, "extdata/co2.csv")
    df_co2 = read_csv("extdata/co2.csv")

There are now many R packages to assist with the download and import of data. The organisation rOpenSci supports a number of these. The example below illustrates this using the WDI package (not supported by rOpenSci) to access World Bank data on CO2 emissions in the transport sector:

    library("WDI")
    WDIsearch("CO2") # search for data on a topic
    co2_transport = WDI(indicator = "EN.CO2.TRAN.ZS") # import data

There will be situations where you cannot download the data directly or when the data cannot be made available. In this case, simply providing a comment relating to the data's origin (e.g. # Downloaded from http://example.com) before referring to the dataset can greatly improve the utility of the code to yourself and others.

There are a number of R packages that provide more advanced functionality than simply downloading files. The CRAN task view on Web technologies provides a comprehensive list. The two packages for interacting with web pages are httr and RCurl. The former package provides (a relatively) user-friendly interface for executing standard HTTP methods, such as GET and POST. It also provides support for web authentication protocols and returns HTTP status codes that are essential for debugging. The RCurl package focuses on lower-level support and is particularly useful for web-based XML support or FTP operations.

Accessing data stored in packages

Most well documented packages provide some example data for you to play with. This can help demonstrate use cases in specific domains that use a particular data format. The command data(package = "package_name") will show the datasets in a package. Datasets provided by dplyr, for example, can be viewed with data(package = "dplyr").
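The result of data() can also be inspected programmatically rather than just viewed. A minimal sketch, using the datasets package that ships with R (chosen here only because it is always installed):

```r
# data() returns an object whose results element is a character matrix
available <- data(package = "datasets")$results

# Each row describes one dataset: its name (Item) and a short title
head(available[, c("Item", "Title")])

# Check whether a particular dataset is provided by the package
has_co2 <- "co2" %in% available[, "Item"]
has_co2
```

This is handy in scripts, where you may want to check that a dataset exists before trying to use it.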

Raw data (i.e. data which has not been converted into R's native .Rds format) is usually located within the sub-folder extdata in R (which corresponds to inst/extdata when developing packages). The function system.file() outputs file paths associated with specific packages. To see all the external files within the readr package, for example, one could use the following command:

    list.files(system.file("extdata", package = "readr"))
    #> [1] "challenge.csv"     "epa78.txt"         "example.log"
    #> [4] "fwf-sample.txt"    "massey-rating.txt" "mtcars.csv"
    #> [7] "mtcars.csv.bz2"    "mtcars.csv.zip"

Further, to 'look around' to see what files are stored in a particular package, one could type the following, taking advantage of RStudio's intellisense file completion capabilities (using copy and paste to enter the file path):

    system.file(package = "readr")
    #> [1] "/home/robin/R/x86_64-pc-linux-gnu-library/3.3/readr"

Hitting Tab after the second command should trigger RStudio to create a miniature pop-up box listing the files within the folder, as illustrated in figure 5.3.

Figure 5.3: Discovering files in R packages using RStudio's 'intellisense'.

References

Eddelbuettel, Dirk, Murray Stokely, and Jeroen Ooms. 2016. "RProtoBuf: Efficient Cross-Language Data Serialization in R." Journal of Statistical Software 71 (2): 1–24. https://doi.org/10.18637/jss.v071.i02.

Source: https://csgillespie.github.io/efficientR/input-output.html
